RealTime AI Agents Frameworks

By LiveTok Labs Team

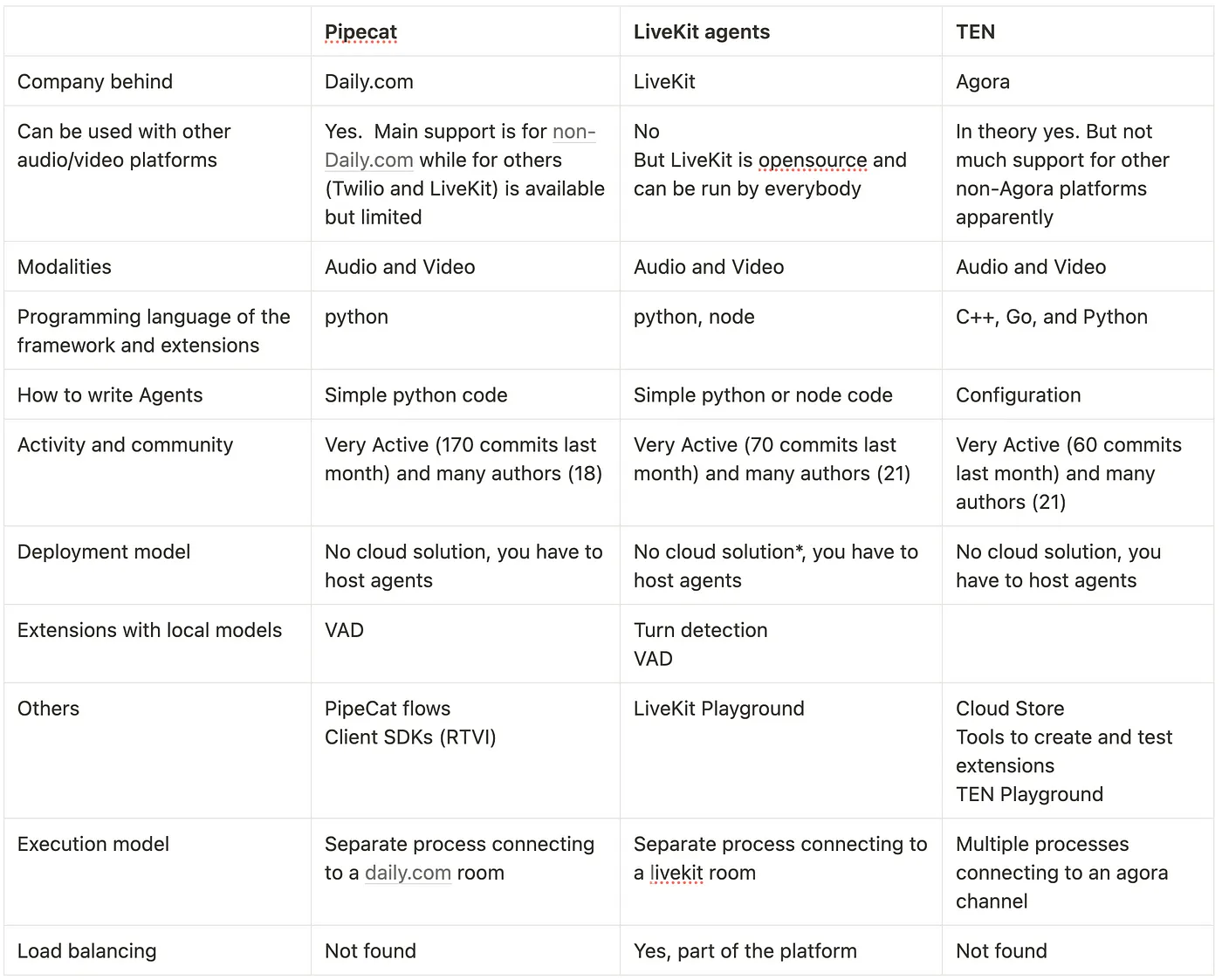

Several frameworks now exist to orchestrate Large Language Models (LLMs) and other AI models so they can accomplish tasks through real-time audio and video interfaces. These frameworks were primarily developed to put a real-time/WebRTC interface in front of existing LLM capabilities, using an existing RTC platform (LiveKit, Daily, Agora, Twilio…) as the intermediary.

These frameworks offer pre-built components that are combined into pipelines or graphs. The components include transport layers, LLM integrations, Speech-to-Text (STT), Text-to-Speech (TTS), various tools, RAG support, and Voice Activity Detection (VAD)/turn management.

With their extensive library of pre-built components, users typically focus on combining existing components rather than writing new ones.

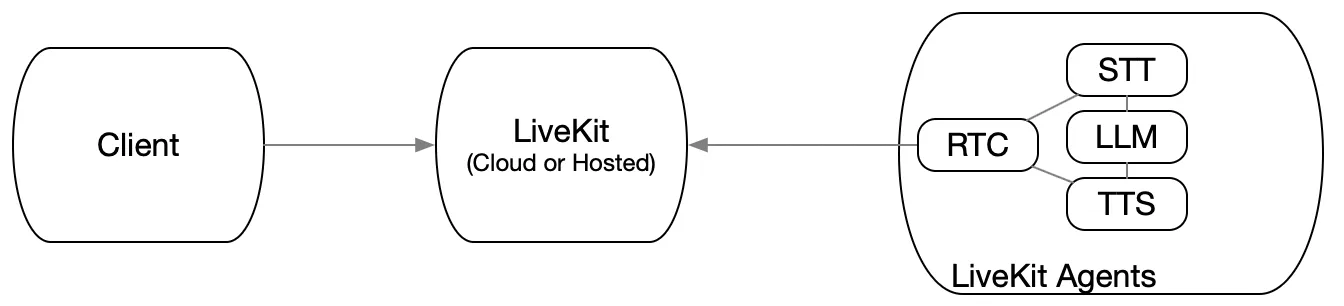

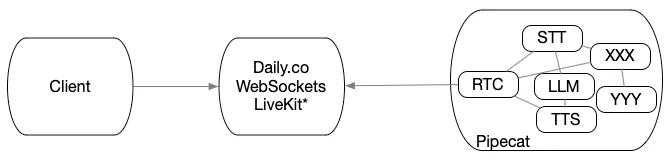

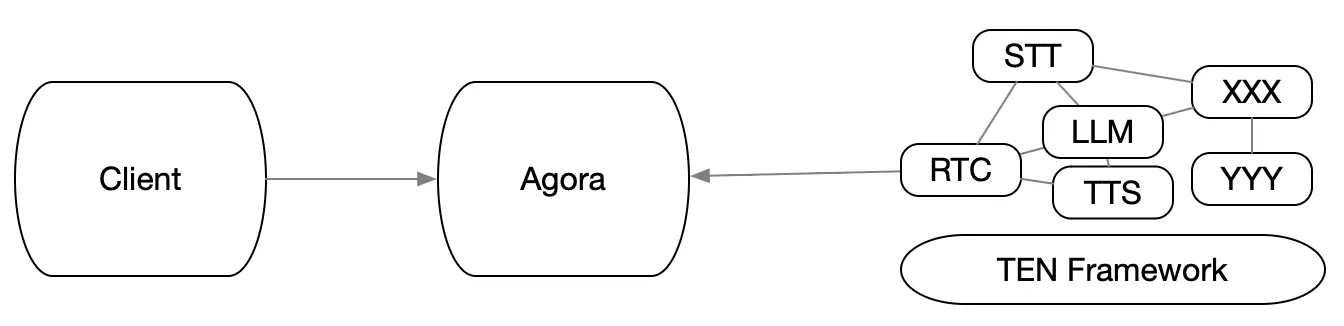

In most cases the agent is a separate process that uses the framework to connect components into a pipeline or graph for audio and video processing. That process joins a room on an RTC platform just like a regular client, passes the audio and video frames it receives into the pipeline, and sends the frames the pipeline generates back to the platform.
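The receive, process and send-back loop can be sketched in a few lines of plain Python. This is only an illustration of the general shape, not the API of any of the frameworks: `Frame`, `run_pipeline`, `agent_loop` and the two toy stages are hypothetical names, and the "room" is simulated with a list.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Frame:
    """A hypothetical media frame: a kind ("audio" or "video") plus a payload."""
    kind: str
    payload: bytes


# A stage takes one frame and returns zero or more frames.
Stage = Callable[[Frame], List[Frame]]


def run_pipeline(stages: List[Stage], frame: Frame) -> List[Frame]:
    """Push one incoming frame through every stage in order."""
    frames = [frame]
    for stage in stages:
        next_frames: List[Frame] = []
        for f in frames:
            next_frames.extend(stage(f))
        frames = next_frames
    return frames


def agent_loop(
    incoming: Iterable[Frame],
    stages: List[Stage],
    send: Callable[[Frame], None],
) -> None:
    """Stand-in for the agent process: receive from the room, process, send back."""
    for frame in incoming:
        for out in run_pipeline(stages, frame):
            send(out)


# Toy stages: drop video, then "process" audio by upper-casing its bytes.
def audio_only(frame: Frame) -> List[Frame]:
    return [frame] if frame.kind == "audio" else []


def shout(frame: Frame) -> List[Frame]:
    return [Frame(frame.kind, frame.payload.upper())]


sent: List[Frame] = []  # simulated "frames sent back to the room"
agent_loop(
    [Frame("audio", b"hello"), Frame("video", b"\x00\x01"), Frame("audio", b"bye")],
    [audio_only, shout],
    sent.append,
)
print([f.payload for f in sent])
```

In a real agent the incoming frames would come from the RTC transport and the stages would be STT, LLM and TTS services, but the control flow is roughly the same.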

I took a look at the three frameworks that seem most popular these days: LiveKit Agents, Pipecat and TEN Framework.

## LiveKit Agents

LiveKit Agents provides a library for building agents in Python or Node.js. The agents connect to a LiveKit server and wait for somebody to join a room; a new agent instance is then spawned to process the audio and video in that room.

The agents are implemented as a linear pipeline of three steps (Speech-to-Text, Large Language Model and Text-to-Speech), but the framework can also be used directly with speech models. There are many pre-built components to integrate with external services.

It includes very good support for turn detection and handling interruptions during conversations.

## Pipecat

Pipecat provides a library for building agents in Python. The agents join a Daily.co room (or a LiveKit room in audio-only mode, or a Twilio room through the WebSockets API) and process the audio and video in it.

The agents are implemented as a more complex pipeline (parallel pipelines are supported, for example) composed of an arbitrary number of steps. Each step runs a “processor”, the component that receives and generates frames. Pipecat can also be used directly with speech models like Gemini. There are many pre-built components to integrate with external services.
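To make the idea of processors and parallel branches concrete, here is a minimal sketch in plain Python. It does not use Pipecat's real classes: `Processor`, `Sequence`, `Parallel`, `Upper` and `Tag` are hypothetical names, and frames are simplified to strings.

```python
from typing import List


class Processor:
    """Hypothetical base class: receives a frame, returns the frames it generates."""

    def process(self, frame: str) -> List[str]:
        return [frame]


class Upper(Processor):
    """Toy processor that upper-cases the frame."""

    def process(self, frame: str) -> List[str]:
        return [frame.upper()]


class Tag(Processor):
    """Toy processor that prefixes the frame with a label."""

    def __init__(self, label: str) -> None:
        self.label = label

    def process(self, frame: str) -> List[str]:
        return [f"{self.label}:{frame}"]


class Sequence(Processor):
    """Runs processors one after another, feeding each one's output to the next."""

    def __init__(self, *steps: Processor) -> None:
        self.steps = steps

    def process(self, frame: str) -> List[str]:
        frames = [frame]
        for step in self.steps:
            frames = [out for f in frames for out in step.process(f)]
        return frames


class Parallel(Processor):
    """Sends the same frame to every branch and concatenates the results."""

    def __init__(self, *branches: Processor) -> None:
        self.branches = branches

    def process(self, frame: str) -> List[str]:
        return [out for branch in self.branches for out in branch.process(frame)]


pipeline = Sequence(
    Upper(),
    Parallel(Tag("transcript"), Sequence(Tag("llm"), Tag("tts"))),
)
result = pipeline.process("hi")
print(result)
```

Because `Sequence` and `Parallel` are themselves processors, they nest freely, which is what lets a pipeline of this style grow beyond a fixed three-step chain.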

It also includes a wrapper called Pipecat Flows that makes it easier to create conversational agents, even without writing code.

## Transformative Extensions Network (TEN)

TEN provides a very generic, low-level framework for building agents, or potentially any other type of application, by orchestrating multiple sub-processes and passing media and control data between them. The agents join an Agora room (or a room on any other RTC platform, if somebody builds an extension for it) and process the audio and video in it.

The agents are implemented by defining a graph that connects the different sub-processes in a JSON configuration file. There is a demo agent that can be used as a reference and tuned to your needs.

The sub-processes can be built in different languages such as C++, Python and Go. There are many pre-built components of this kind to integrate with different external services.
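As a rough illustration of what a graph defined in JSON means in practice, the sketch below parses a heavily simplified graph and flattens it into edges. The field names mirror the simplified configuration in the Examples section; the `list_edges` helper and the validation it performs are assumptions made for illustration, not part of TEN.

```python
import json

# Hypothetical, heavily simplified graph description; real TEN graphs carry more fields.
GRAPH_JSON = """
{
  "nodes": [
    {"addon": "agora_rtc"},
    {"addon": "deepgram_asr_python"},
    {"addon": "openai_chatgpt_python"}
  ],
  "connections": [
    {
      "extension": "agora_rtc",
      "audio_frame": [
        {"name": "pcm_frame", "dest": [{"extension": "deepgram_asr_python"}]}
      ]
    }
  ]
}
"""


def list_edges(graph: dict) -> list:
    """Flatten the connections into (source, message name, destination) tuples."""
    known = {node["addon"] for node in graph["nodes"]}
    edges = []
    for conn in graph["connections"]:
        source = conn["extension"]
        for key, routes in conn.items():
            if key == "extension":
                continue  # every other key is a message type, e.g. "audio_frame"
            for route in routes:
                for dest in route["dest"]:
                    target = dest["extension"]
                    if source not in known or target not in known:
                        raise ValueError(f"unknown node in edge {source} -> {target}")
                    edges.append((source, route["name"], target))
    return edges


edges = list_edges(json.loads(GRAPH_JSON))
print(edges)
```

Seen this way, the configuration is a declarative routing table: each node names a component, and each connection says which kind of message flows from one node to another.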

There are other frameworks that also look very interesting, like ai01 for the dRTC platform, but I haven’t had time to play with them yet.

## Comparison

## Examples

These are examples of the most basic conversational agent built with each of the three frameworks. They are snippets, and a few lines are skipped to make them easier to compare. You can find complete examples in the projects’ repositories.

### LiveKit Agents

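```python
# Linear voice pipeline: VAD -> STT -> LLM -> TTS
agent = VoicePipelineAgent(
    vad=silero.VAD.load(),
    stt=deepgram.STT(),
    llm=openai.LLM(),
    tts=cartesia.TTS(),
)

# Wait for somebody to join the room, then start the agent for that participant
participant = await ctx.wait_for_participant()
agent.start(ctx.room, participant)
```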

### Pipecat

Pipecat requires many things (e.g. credentials) to be configured in code, so it tends to be more verbose than LiveKit. Those details are omitted in the snippet.

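```python
# Transport that joins the Daily room (credentials and other parameters omitted)
transport = DailyTransport(
    room_url,
    DailyParams(
        vad_analyzer=SileroVADAnalyzer()
    )
)

# Frames flow through the processors in the order listed
pipeline = Pipeline([
    transport.input(),
    DeepgramSTTService(),
    OpenAILLMService(),
    CartesiaTTSService(),
    transport.output()
])

task = PipelineTask(pipeline)
runner = PipelineRunner()
await runner.run(task)
```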

### Transformative Extensions Network (TEN)

The agents are defined by a configuration in JSON format. It is a bit verbose, but a simplified version for comparison looks like this:

```json
"nodes": [
  { "addon": "agora_rtc" },
  { "addon": "deepgram_asr_python" },
  { "addon": "openai_chatgpt_python" },
  { "addon": "cartesia_tts" }
],
"connections": [
  {
    "extension": "agora_rtc",
    "audio_frame": [
      {
        "name": "pcm_frame",
        "dest": [
          { "extension": "deepgram_asr_python" }
        ]
      }
    ]
  },
  // Many more connections needed here
  ...
]
```

## Conclusions and thoughts

The frameworks range from generic to feature-rich. LiveKit and Pipecat share similarities, while TEN offers a more complex, lower-level solution. All three frameworks show comparable adoption rates based on GitHub stars and activity.

TEN provides the most flexibility, while LiveKit offers the least. However, LiveKit excels in simplicity and rapid development, with Pipecat following closely behind.

Each framework has unique strengths: LiveKit, for example, features impressive custom turn detection and job dispatching, while Pipecat and TEN support RTC platforms other than their own.

Surprisingly, none of these companies currently offers a cloud hosting solution for their agents, though LiveKit’s recent job posting suggests this might change.

These frameworks were particularly valuable when LLMs lacked native RTC interfaces with Speech-to-LLM and LLM-to-Speech capabilities. However, with OpenAI now offering GPT-4’s WebRTC interface and Gemini accessible through a simple proxy (rt-llm-proxy), it will be interesting to see how these frameworks evolve.

Thanks to David Zhao (LiveKit) and Mark Backman () for their comments and fixes. As usual, feedback is more than welcome, either here or on Twitter.